In a significant move toward protecting teen users, Meta's support for a higher EU age limit has drawn attention across Europe. The tech giant is backing new proposals to raise the minimum age for social media access.

Under these proposals, updated EU rules could require platforms such as Facebook, Instagram, Snapchat, and TikTok to restrict access for users under 16 or 17. That would be a step up from the minimum age of 13 that most platforms currently apply.

Let’s explore why Meta backs an EU minimum age law, what it means for teen safety on social media, and how it may change digital habits in Europe and beyond.

What’s Behind the EU’s Push to Raise the Age?

The European Commission and Parliament are reviewing digital legislation that could increase the minimum legal age to access social media platforms. The rationale includes:

- Rising cases of cyberbullying and teen anxiety

- Worries over algorithmic addiction

- Concerns about data collection from minors

- Pressure from parents and child advocacy groups

The changes could be made through amendments to the Digital Services Act (DSA) and the General Data Protection Regulation (GDPR), which would impose stricter controls for minors. The push also reflects Europe's broader trend toward digital accountability.

Why Meta Supports the Higher Age Limit

Meta (parent company of Facebook, Instagram, and Threads) has publicly endorsed the EU’s direction, a surprising yet strategic move. Here’s why:

Preempting Regulatory Backlash

By aligning early with the EU’s agenda, Meta may avoid harsher penalties and demonstrate its commitment to child safety.

Building Long-Term Trust

Meta’s support may rebuild trust with European regulators and users, especially after past scrutiny over Instagram’s impact on teen mental health.

Platform Evolution

With the rise of AI content moderation and age verification tools, Meta has the tech infrastructure to support more nuanced user segmentation.

What Changes Could Be Implemented?

If the EU raises the social media age limit, several changes could roll out across platforms:

| Feature | Current | Post-Law Change |

|---|---|---|

| Minimum Age | 13 | 16 or 17 |

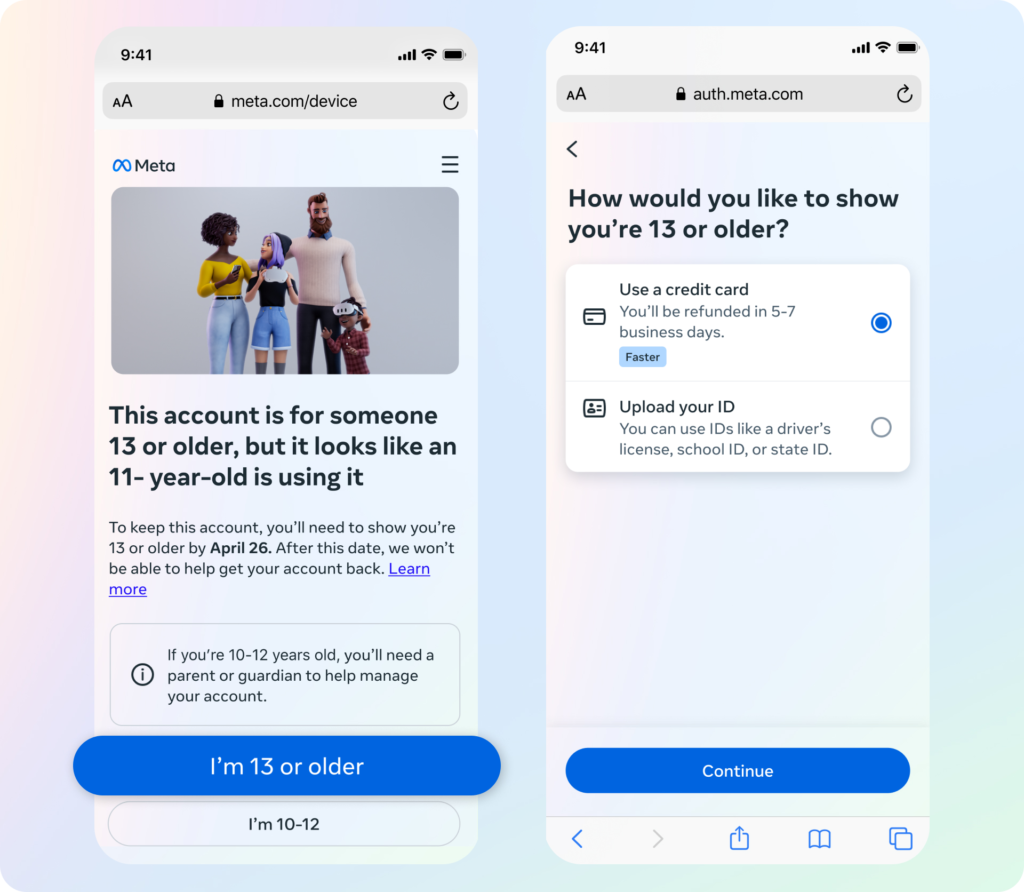

| Age Verification | Basic self-entry | Government ID, AI tools |

| Ads Targeted to Minors | Limited | Heavily Restricted or Banned |

| Mental Health Resources | Optional | Mandatory per platform |

| Parental Controls | Minimal | Expanded + Notification Systems |

Platforms would need to invest more in age verification technology and content gating systems to comply with the law in all 27 EU member states.

The Psychology Behind Raising the Age

Research from the American Psychological Association (APA) and EU research bodies suggests that 13 may be too young for children to cope with the pressures of social media:

- Social comparison affects self-worth

- Algorithmic feeds can lead to compulsive use

- Exposure to harmful content increases vulnerability

Raising the age bar may offer developmental protection during critical teenage years. It also reflects the view that access to social media should match a young person's emotional and developmental readiness.

How This Affects Privacy and Data Collection

The new policy could reshape how platforms collect and process data from teen users:

- Stricter GDPR enforcement on children’s data

- Platforms may require explicit parental consent for users under 18

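- Wider restrictions on personalized ads for minors, building on the DSA's existing ban on profiling-based ads shown to users a platform knows are minors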

These changes could reduce ad revenue in the short term, but they would lay the foundation for a more ethical digital ecosystem.

Impact on Platforms like TikTok, Snapchat, and Discord

While Meta is backing the EU’s proposal, other platforms may find this adjustment harder. TikTok and Snapchat, which have large teen user bases, could face:

- Massive account purges for underage users

- Drop in engagement and watch time

- Legal liabilities for non-compliance

Smaller or newer platforms will need to scale content moderation and revamp onboarding flows to match new norms.

Will This Influence Other Countries?

Quite possibly. EU rules often shape regulation well beyond Europe. If this law is enforced:

- The UK and Canada may follow with similar laws, joining Australia, which has already legislated a minimum age of 16 for social media

- The U.S. Congress could revisit COPPA (Children's Online Privacy Protection Act) and KOSA (Kids Online Safety Act)

- India may revise its Information Technology Rules to match Europe’s standard

Digital regulation tends to spread from one market to the next, and Meta’s early support may help it stay ahead of the curve globally.

What Parents and Teens Should Know

If the new EU rule becomes law:

- Teens under 16 or 17 may need parental approval to create accounts

- Platforms may restrict live features, DMs, or ad visibility

- Parents could get access to content moderation tools

- Social media “time-outs” or daily limits may become default

Parents should prepare for a shift in their children's digital habits, and teens should understand how these changes are meant to protect their wellbeing.

A Shift Towards Ethical Social Media

Meta’s decision to back the EU minimum age proposal for social media signals a new chapter in digital responsibility. It acknowledges that social media, when introduced too early, can have unintended consequences for young minds.

As regulators demand safety-first features, Meta and its rivals are being pushed to prioritize user wellbeing over engagement. That shift could redefine social media access in Europe and beyond.