Meta Platforms Inc., the parent company of Facebook, Instagram, and WhatsApp, is doubling down on artificial intelligence (AI) with a reported $10 billion investment in Scale AI, a leading data labeling and AI infrastructure firm. This aggressive push into AI training and infrastructure signals Meta’s ambition to dominate the AI race, not just participate in it.

With this investment, Meta aims to accelerate AI model training, improve data annotation accuracy, and stay ahead of the competition in generative AI, machine learning, and large language models (LLMs). This post explores the potential impact of the investment, what it means for the AI industry, and how Meta is positioning itself as a frontrunner in the future of artificial intelligence.

Why Meta Chose Scale AI for Its $10 Billion Investment

Trusted Partner for AI Infrastructure

Scale AI has built a strong reputation as one of the most trusted partners in the field of AI data labeling, synthetic data generation, and scalable infrastructure solutions for AI development. Known for its precise annotation workflows, the company has served major AI players like OpenAI, Anthropic, Microsoft, and the U.S. Department of Defense.

With Meta’s focus on improving AI model accuracy, training data quality, and real-time deployment, this investment is a calculated move to leverage Scale AI’s deep expertise and tools.

Scale AI’s Advantage in Data Labeling

High-quality training datasets are the backbone of successful AI systems. Scale AI specializes in providing meticulously annotated data across various domains including text, images, video, 3D spatial data, and more. By tapping into Scale AI’s capabilities, Meta aims to feed its models with high-volume, high-accuracy datasets, which are essential for building smarter AI systems.
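To make "high-accuracy" concrete: one common quality check in labeling workflows is to collect labels from several annotators per item, keep the majority label, and flag items where annotators disagree. The sketch below is a generic Python illustration of that idea, not Scale AI's or Meta's actual pipeline; the function name `aggregate_labels` and the 0.6 review threshold are assumptions made for the example.

```python
from collections import Counter


def aggregate_labels(annotations, min_agreement=0.6):
    """Majority-vote each item's labels and flag low-agreement items.

    annotations: dict mapping item id -> list of labels from annotators.
    Returns a dict mapping item id -> (label, agreement, needs_review).
    """
    results = {}
    for item_id, labels in annotations.items():
        if not labels:
            # No annotations yet: nothing to vote on, so send to review.
            results[item_id] = (None, 0.0, True)
            continue
        # most_common(1) returns the label with the highest count;
        # ties resolve to the label that was seen first.
        label, count = Counter(labels).most_common(1)[0]
        agreement = count / len(labels)
        results[item_id] = (label, agreement, agreement < min_agreement)
    return results


if __name__ == "__main__":
    # Hypothetical annotations for three images.
    sample = {
        "img_001": ["cat", "cat", "cat"],
        "img_002": ["cat", "dog", "dog"],
        "img_003": ["cat", "dog", "bird", "fish"],
    }
    for item_id, result in aggregate_labels(sample).items():
        print(item_id, result)
```

Items flagged for review would typically go back to a human for a second pass, which is where the cost of producing high-accuracy data at high volume comes from.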

Strategic Importance of the $10 Billion Investment

1. Supercharging Large Language Models (LLMs)

Meta’s ambition to compete with ChatGPT, Claude, and Gemini is evident in its LLaMA models (Large Language Model Meta AI). To scale LLaMA’s capabilities, Meta needs access to massive, clean, and diverse datasets.

With Scale AI’s proprietary data pipelines, Meta can train its next-generation models faster and more efficiently. This would allow Meta to release more advanced LLaMA versions capable of outperforming competitors in tasks like:

- Natural language understanding (NLU)

- Sentiment analysis

- Text summarization

- Real-time multilingual translation

- Conversational AI

2. Fueling Generative AI Innovation

Meta has publicly shown its interest in generative AI models, including those for text, images (like Emu), and video (like Make-A-Video). Access to Scale AI’s synthetic data generation through this $10 billion investment could be a turning point for model training accuracy.

Generative AI models require:

- Massive, diverse training datasets

- Synthetic augmentation to cover edge cases

- Real-time validation loops

Scale AI offers all these capabilities through its scalable infrastructure. This is exactly what Meta needs to train safer, smarter, and faster generative models.
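As a rough illustration of how synthetic augmentation and a validation loop fit together, the Python sketch below tops up under-represented classes with generated examples and keeps only the candidates that pass a check. It is a minimal sketch under stated assumptions, not a description of Scale AI's tooling: `augment_rare_classes`, `make_synthetic`, and `is_valid` are hypothetical names, and the jitter-based generator in the demo stands in for a real synthetic data model.

```python
import random
from collections import Counter


def augment_rare_classes(dataset, make_synthetic, is_valid,
                         min_per_class, max_attempts=1000, seed=0):
    """Top up under-represented classes with validated synthetic examples.

    dataset: list of (example, label) pairs.
    make_synthetic(example, rng): hypothetical generator returning a new example.
    is_valid(example): hypothetical validation check; rejected candidates are dropped.
    """
    rng = random.Random(seed)
    counts = Counter(label for _, label in dataset)
    augmented = list(dataset)
    for label, count in counts.items():
        # Real examples of this class act as seeds for generation.
        seeds = [ex for ex, lab in dataset if lab == label]
        attempts = 0
        # max_attempts bounds the loop if the validator keeps rejecting.
        while count < min_per_class and attempts < max_attempts:
            attempts += 1
            candidate = make_synthetic(rng.choice(seeds), rng)
            if is_valid(candidate):
                augmented.append((candidate, label))
                count += 1
    return augmented


if __name__ == "__main__":
    # Toy data: five "common" readings and a single "rare" edge case.
    data = [(1.0, "common")] * 5 + [(10.0, "rare")]
    result = augment_rare_classes(
        data,
        make_synthetic=lambda x, rng: x + rng.uniform(-1.0, 1.0),
        is_valid=lambda x: x > 0,
        min_per_class=3,
    )
    print(Counter(label for _, label in result))
```

The design point is that generation and validation are separate steps: a generator can be cheap and noisy as long as the validation check filters out bad candidates before they reach training.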

Impact on the Broader AI Ecosystem

Market Leadership in AI Training Infrastructure

With this deal, Meta sends a strong signal to the AI market: it is not just building AI applications, but also moving to own the AI infrastructure stack. This could set Meta apart from competitors like Google and Amazon, which often rely on hybrid infrastructure or third-party providers.

Enhancing Data Governance and Ethical AI

One potential advantage of partnering with Scale AI is improved data transparency, model governance, and ethical AI implementation. As AI adoption grows globally, governments are pushing for stricter data accountability. Meta’s partnership with an established AI data company could support:

- Compliance with global AI ethics regulations

- Reduction in AI model bias

- Improved explainability in LLM outputs

Meta’s Long-Term AI Strategy: Owning the Stack

Meta’s AI roadmap goes beyond just building smart assistants. It aims to build an AI ecosystem where:

- Data is sourced, annotated, and managed in-house

- Model architecture and training pipelines are fully optimized

- Deployment is faster and more aligned with user needs

This $10 billion move complements Meta’s in-house AI Research SuperCluster (RSC), PyTorch development, and FAIR (Fundamental AI Research, formerly Facebook AI Research) initiatives. Combining internal R&D with Scale AI’s scalable infrastructure is a powerful strategy for long-term dominance.

Competitive Implications for Google, Amazon, and Microsoft

Google DeepMind and Gemini Models

While Google continues refining its Gemini AI models, it still depends on internal and open-source data. Meta’s access to high-precision, proprietary datasets from Scale AI could give it an advantage in training efficiency and accuracy.

Microsoft and OpenAI Collaboration

Microsoft’s partnership with OpenAI focuses on application development and cloud deployment via Azure. However, Meta’s investment focuses more on infrastructure independence and data sovereignty, making its AI efforts less reliant on external platforms.

Amazon and AWS AI Tools

Amazon leads in cloud-based AI services, but Meta’s move signals a different play—full-stack AI dominance. By controlling the data and infrastructure, Meta could reduce dependency on AWS and bring AI operations fully under its ecosystem.

Key Takeaways

- Meta is investing $10 billion in Scale AI to boost its AI model training infrastructure, optimize data labeling, and fuel LLaMA and generative AI models.

- Scale AI’s data annotation, synthetic data, and infrastructure scale are vital to Meta’s long-term AI dominance.

- The partnership could redefine large language model performance, AI ethics, and infrastructure ownership in the competitive AI space.

- Meta is securing its future in AI not just by developing models—but by controlling the entire pipeline from data to deployment.

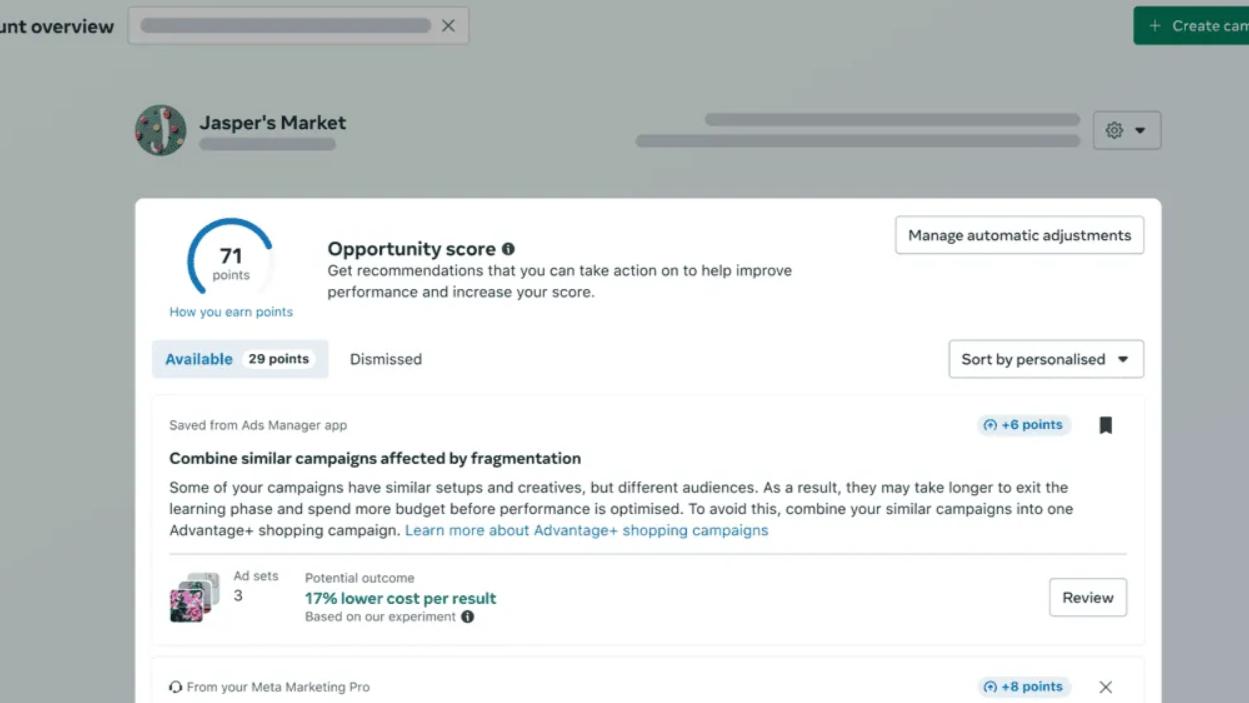

What This Means for Businesses and Developers

Meta’s move isn’t just a tech industry headline—it’s a wake-up call for businesses relying on AI:

- Expect better AI APIs and tools from Meta

- Look forward to more precise and faster AI responses

- Prepare for cost-effective AI integration options as Meta scales up model efficiency

Developers and enterprises should keep an eye on new Meta tools and SDKs powered by the enhanced training pipeline made possible through Scale AI.

Conclusion: The Future is Being Trained Now

Meta’s $10 billion bet on Scale AI is more than an investment—it’s a blueprint for owning the future of artificial intelligence. As the AI race heats up, those who control the data pipeline and model training ecosystem will set the rules.

With this bold move, Meta signals that it’s not just playing catch-up—it’s aiming to lead the AI revolution from the front.